How to Use and Interpret Kappa to Describe Agreement

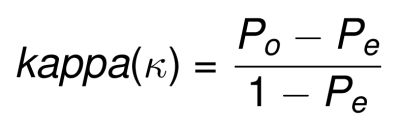

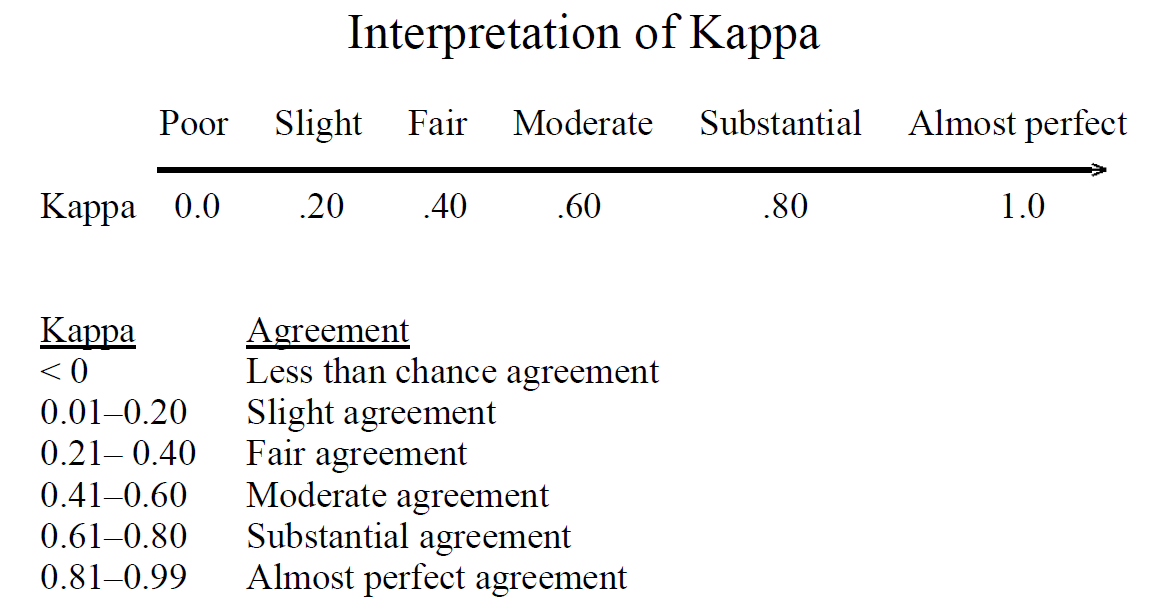

This article describes how to interpret the kappa coefficient, which is used to assess inter-rater reliability or agreement. A negative kappa represents agreement worse than expected by chance, that is, disagreement.

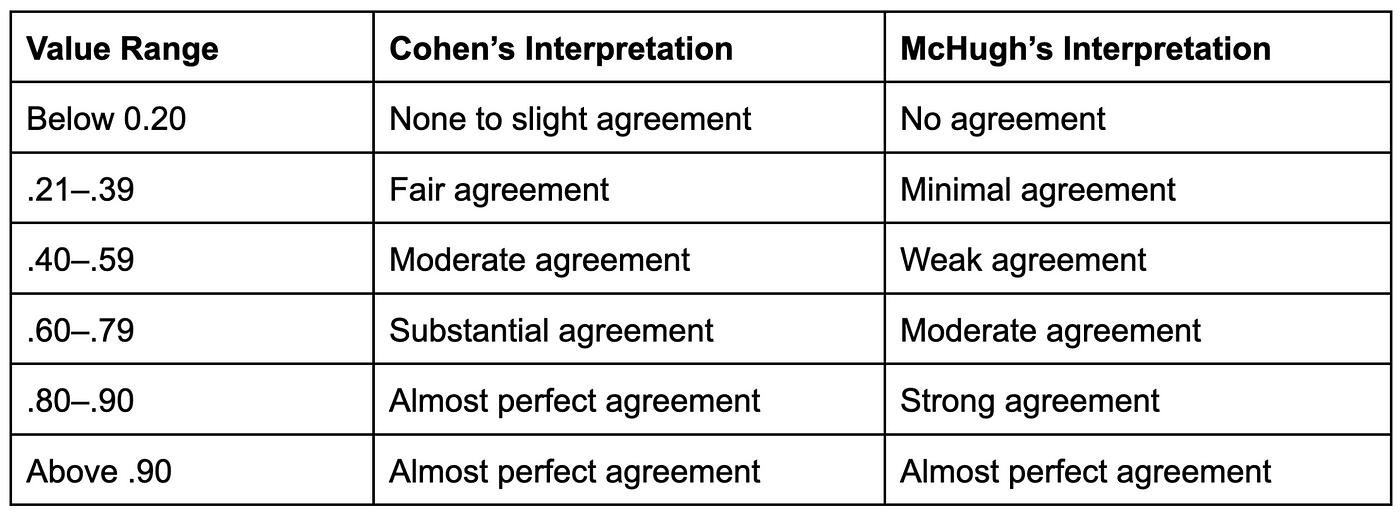

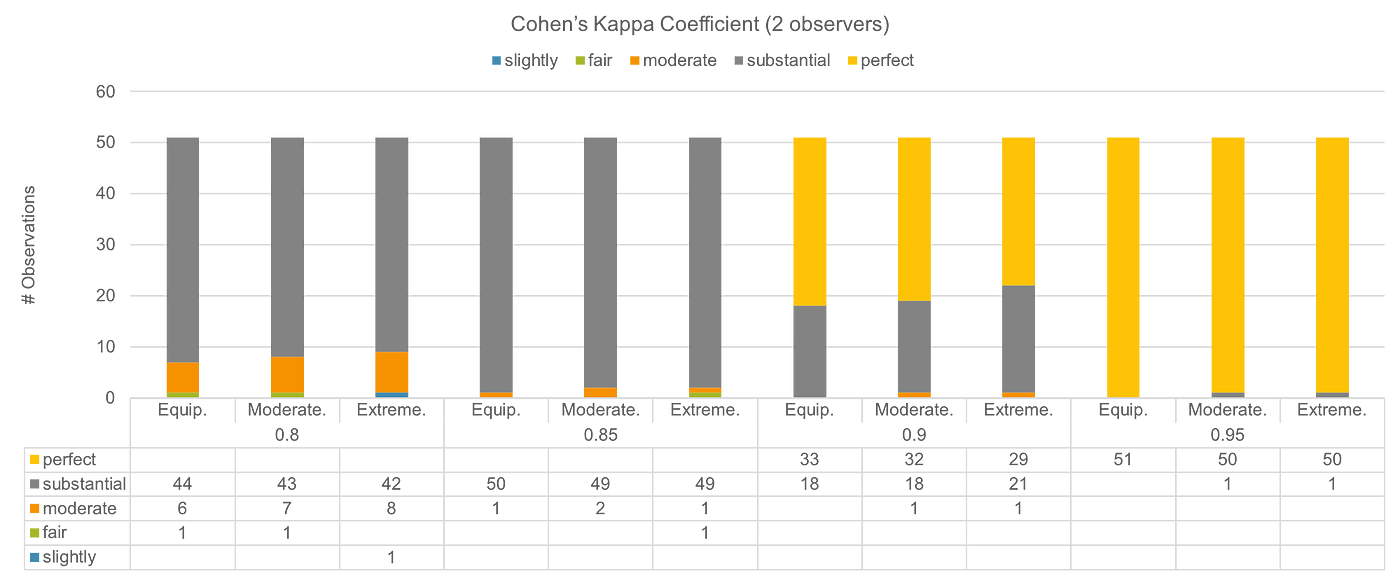

Interpretation of Cohen's kappa statistic (18) for strength of agreement

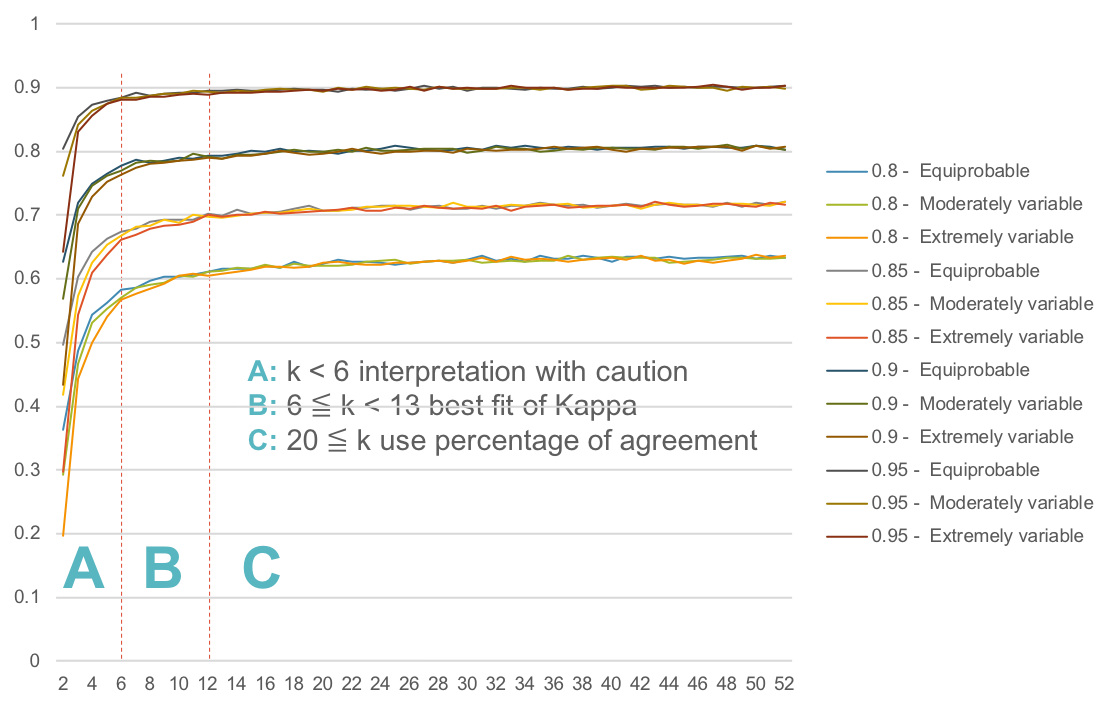

Kappa values range from -1 to 1.

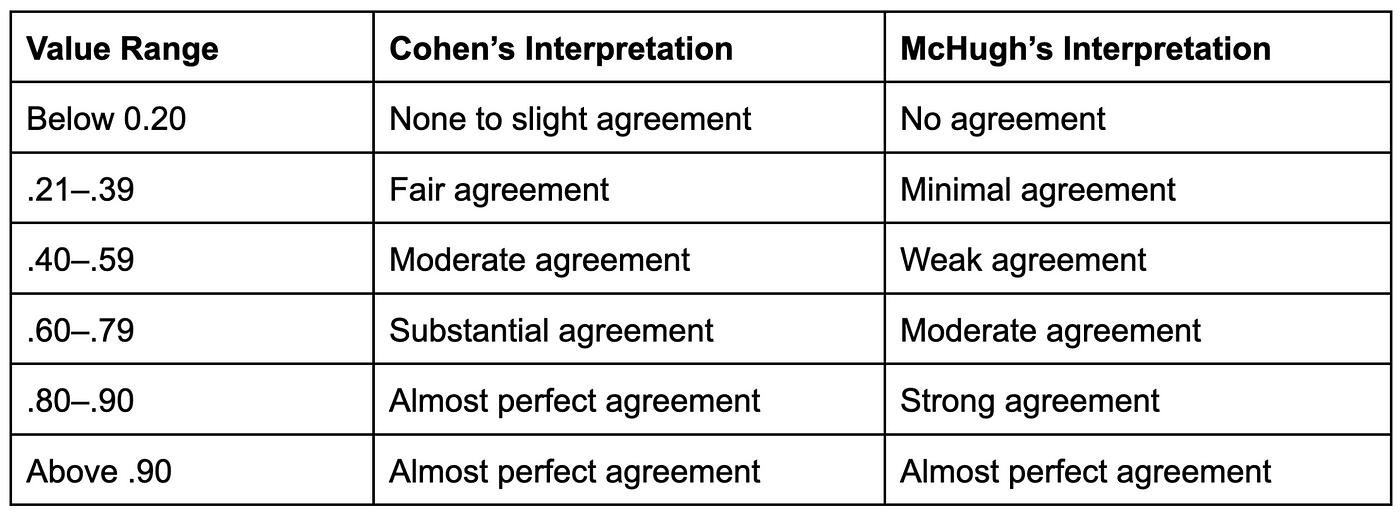

Cohen's kappa (κ) can range from -1 to 1. Look in the Intraclass Correlation Coefficient table under the Intraclass Correlation column. Based on the guidelines from Altman (1999), adapted from Landis and Koch (1977), a kappa (κ) of .593 represents a moderate strength of agreement.
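The mapping from a kappa value to a verbal label can be sketched as a small lookup. The cut-offs and labels below are an assumption: they follow the commonly cited Landis and Koch (1977) bands, which are not reproduced in this article, and the function name `interpret_kappa` is hypothetical.

```python
def interpret_kappa(kappa: float) -> str:
    """Return a strength-of-agreement label for a kappa value.

    Assumption: bands follow the commonly cited Landis and Koch (1977)
    scale; other authors (e.g. Altman, 1999) use slightly different labels.
    """
    if not -1.0 <= kappa <= 1.0:
        raise ValueError("kappa must lie in [-1, 1]")
    if kappa < 0.0:
        return "poor"
    # (upper bound, label) pairs, checked in ascending order.
    bands = [
        (0.20, "slight"),
        (0.40, "fair"),
        (0.60, "moderate"),
        (0.80, "substantial"),
        (1.00, "almost perfect"),
    ]
    for upper, label in bands:
        if kappa <= upper:
            return label
    return "almost perfect"


print(interpret_kappa(0.593))  # prints "moderate"
```

Under these assumed bands, the value of .593 discussed above falls in the 0.41 to 0.60 range, which matches the "moderate" reading.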

Perfect agreement would equate to a kappa of 1, and chance agreement would equate to 0. Use kappa statistics to assess the degree of agreement of the nominal or ordinal ratings made by multiple appraisers when the appraisers evaluate the same samples.

The Single Measures value is used when an individual rating is the level of observation in the outcome. You can see that Cohen's kappa (κ) is .593. The purpose of this study was to devise and describe a reliable and valid test the.

So residents in this hypothetical study seem to be in moderate agreement that noon lectures are not that helpful. The response of kappa to differing baserates was examined and methods for. Can also be used to calculate se.

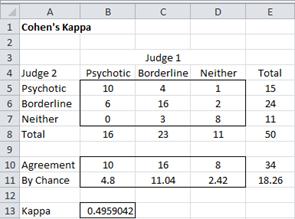

Kappa is always less than or equal to 1. How to interpret kappa. Cohen's kappa is an index that measures inter-rater agreement for categorical (qualitative) items.

The kappa statistic is used to describe inter-rater agreement and reliability. The steps for interpreting the SPSS output for the ICC. We describe how to construct and interpret Bangdiwala's (1985).

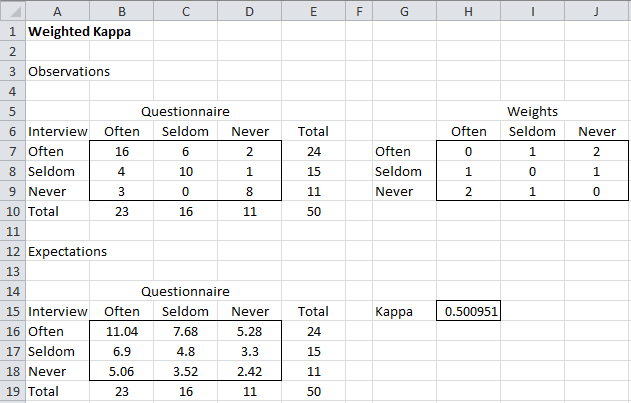

Cohen's kappa is a metric often used to assess the agreement between two raters. Inter-rater agreement - Kappa and Weighted Kappa.

Measurement of the extent to which the raters assign the same score to the same variable is called inter-rater reliability. Interpreting the results from a Fleiss' kappa analysis: Fleiss' kappa (κ) is a statistic that was designed to take into account chance agreement. Cohen's kappa is a measure of the agreement between two raters who have recorded a categorical outcome for a number of individuals.
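For more than two raters, the chance correction in Fleiss' kappa can be sketched in plain Python. This is a minimal illustration, not a reference implementation: the function name `fleiss_kappa`, the input layout (one row per subject, one column per category, cells holding rater counts), and the data are all assumptions made for the example.

```python
def fleiss_kappa(counts):
    """Fleiss' kappa for a subjects-by-categories matrix of rating counts.

    counts[i][j] is the number of raters who put subject i in category j.
    Every subject must be rated by the same number of raters (at least 2).
    """
    n_subjects = len(counts)
    n_raters = sum(counts[0])
    if n_raters < 2 or any(sum(row) != n_raters for row in counts):
        raise ValueError("each subject needs the same number (>= 2) of ratings")

    # Per-subject agreement: share of rater pairs that agree on subject i.
    per_subject = [
        (sum(c * c for c in row) - n_raters) / (n_raters * (n_raters - 1))
        for row in counts
    ]
    p_bar = sum(per_subject) / n_subjects

    # Chance agreement from the overall share of ratings in each category.
    n_categories = len(counts[0])
    total = n_subjects * n_raters
    shares = [sum(row[j] for row in counts) / total for j in range(n_categories)]
    p_e = sum(s * s for s in shares)
    if p_e == 1.0:
        raise ValueError("kappa is undefined when all ratings fall in one category")
    return (p_bar - p_e) / (1 - p_e)


# Hypothetical data: 4 subjects, 3 raters, 2 categories.
ratings = [[3, 0], [0, 3], [2, 1], [1, 2]]
print(round(fleiss_kappa(ratings), 3))  # prints 0.333
```

In this made-up data set, two subjects are rated unanimously and two are split 2 to 1, so the observed pairwise agreement (2/3) is only partly above the chance level (1/2).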

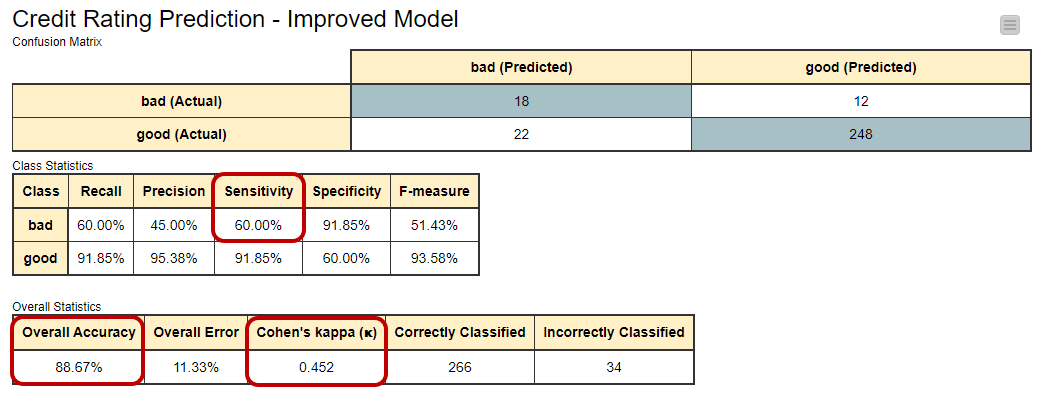

For Table 1018, use kappa to describe agreement. Good and bad based on their creditworthiness we could then measure. Fleiss' kappa is one of many chance-corrected agreement coefficients.

In most applications, there is usually more interest in the magnitude of kappa than in the statistical significance of kappa. It can also be used to assess the performance of a classification model. When kappa = 1, perfect agreement exists.

These coefficients are all based on the average observed proportion of agreement. In data mining it is usually. When kappa < 0, agreement is weaker than expected by chance.

A value of 1 implies perfect agreement, and values less than 1 imply less than perfect agreement. In rare situations, kappa can be negative. More formally, kappa is a robust way to find the degree of agreement between two raters/judges where the task is to put N items in K mutually exclusive categories.
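The setting just described, two raters sorting N items into K mutually exclusive categories, can be sketched directly from two lists of labels. The function name `cohen_kappa` and the example labels are hypothetical; the calculation uses the standard observed-versus-chance definition of Cohen's kappa.

```python
from collections import Counter


def cohen_kappa(rater_a, rater_b):
    """Cohen's kappa for two equal-length sequences of category labels."""
    if len(rater_a) != len(rater_b) or len(rater_a) == 0:
        raise ValueError("both raters must label the same non-empty set of items")
    n = len(rater_a)

    # Observed agreement: share of items given the same label by both raters.
    p_o = sum(a == b for a, b in zip(rater_a, rater_b)) / n

    # Chance agreement: product of the raters' marginal shares, per category.
    count_a = Counter(rater_a)
    count_b = Counter(rater_b)
    p_e = sum(count_a[c] * count_b[c] for c in count_a) / (n * n)
    if p_e == 1.0:
        raise ValueError("kappa is undefined when both raters use one category only")
    return (p_o - p_e) / (1 - p_e)


# Hypothetical labels for four items.
print(cohen_kappa(["x", "x", "y", "y"], ["x", "x", "y", "y"]))  # prints 1.0
print(cohen_kappa(["x", "x", "y", "y"], ["y", "y", "x", "x"]))  # prints -1.0
```

The second call shows the rare negative case: the raters disagree on every item, so observed agreement (0) is below the chance level (0.5).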

The Average Measures value is used when the average of several ratings is the level of. Determining consistency of agreement between 2 raters or between 2 types of classification systems on a dichotomous outcome. This is a sign that the two observers agreed.

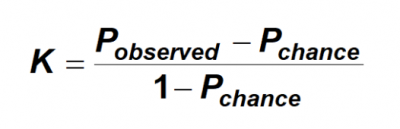

Baserate Matters. Cornelia Taylor Bruckner (Sonoma State University), Paul Yoder (Vanderbilt University). Abstract: Kappa (Cohen, 1960) is a popular agreement statistic used to estimate the accuracy of observers. When assessing the concordance between two methods of measurement of ordinal categorical data, summary measures such as Cohen's (1960) kappa or Bangdiwala's (1985) B-statistic are used. All kappa-like coefficients have the particularity of accounting for chance agreement, i.e.

Table 2 may help you visualize the interpretation of kappa. A large negative kappa represents great disagreement among raters.

Pitfalls in the use of kappa when interpreting agreement between multiple raters in reliability studies. Low negative values (0 to -0.10) may generally be interpreted as no agreement. Interpreting Kappa in Observational Research.

However, a picture conveys more information than a single summary measure. Reliability of nursing observations often is estimated using Cohen's kappa, a chance-adjusted measure of agreement between observer RNs. In terms of our example, even if the police officers were to guess randomly about each individual's behaviour, they would end up agreeing on some individuals' behaviour simply by chance.

Data collected under conditions of such disagreement among raters are not meaningful. The higher the value of kappa, the stronger the agreement. This is the proportion of agreement over and above chance agreement.
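"Agreement over and above chance" corresponds to the usual definition of Cohen's kappa. The symbols are assumptions introduced here for illustration: $p_o$ is the observed proportion of agreement, $p_e$ the proportion expected by chance, $p_{kk}$ the proportion of items both raters place in category $k$, and $p_{k+}$, $p_{+k}$ the two raters' marginal proportions for category $k$.

```latex
\kappa = \frac{p_o - p_e}{1 - p_e},
\qquad
p_o = \sum_{k=1}^{K} p_{kk},
\qquad
p_e = \sum_{k=1}^{K} p_{k+}\, p_{+k}
```

Read this way, $\kappa = 1$ when $p_o = 1$, $\kappa = 0$ when $p_o = p_e$, and $\kappa < 0$ when observed agreement falls below the chance level.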

The kappa statistic is applied to interpret data that are the result of a judgement rather than a measurement. For example, if we had two bankers and we asked both to classify 100 customers in two classes for credit rating, i.e.
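The two-banker scenario can be worked through with a small contingency table. The counts below are invented purely for illustration (they are not from any study), and `kappa_from_table` is a hypothetical helper name.

```python
def kappa_from_table(table):
    """Cohen's kappa from a square contingency table.

    Rows are rater A's categories, columns are rater B's categories,
    and each cell holds the number of items with that pair of ratings.
    """
    k = len(table)
    n = sum(sum(row) for row in table)
    row_tot = [sum(row) for row in table]
    col_tot = [sum(table[i][j] for i in range(k)) for j in range(k)]

    p_o = sum(table[i][i] for i in range(k)) / n  # diagonal = agreements
    p_e = sum(row_tot[i] * col_tot[i] for i in range(k)) / (n * n)
    return (p_o - p_e) / (1 - p_e)


# Hypothetical ratings of 100 customers by two bankers.
#            B: good  B: bad
# A: good       40      10
# A: bad        15      35
bankers = [[40, 10], [15, 35]]
print(kappa_from_table(bankers))  # prints 0.5
```

With these made-up counts the bankers agree on 75 of 100 customers, but half of that agreement is expected by chance, which leaves a kappa of 0.5.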

Cohen's kappa factors out agreement due to chance; the two raters either agree or disagree on the category to which each subject is assigned, so the level of agreement is not weighted. Creates a classification table from raw data in the spreadsheet for two observers and calculates an inter-rater agreement statistic (kappa) to evaluate the agreement between. When interpreting kappa it is also important to keep.
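When categories are ordered, a weighted kappa gives partial credit for near misses instead of treating every disagreement alike. The sketch below assumes linear disagreement weights, which is one common choice among several; the function name `linear_weighted_kappa` and the 3x3 table are hypothetical.

```python
def linear_weighted_kappa(table):
    """Linearly weighted kappa from a square table of ordered categories."""
    k = len(table)
    n = sum(sum(row) for row in table)
    row_tot = [sum(row) for row in table]
    col_tot = [sum(table[i][j] for i in range(k)) for j in range(k)]

    observed = 0.0  # weighted observed disagreement
    expected = 0.0  # weighted disagreement expected by chance
    for i in range(k):
        for j in range(k):
            # Assumed linear weights: 0 on the diagonal, 1 at the extremes.
            weight = abs(i - j) / (k - 1)
            observed += weight * table[i][j] / n
            expected += weight * row_tot[i] * col_tot[j] / (n * n)
    if expected == 0.0:
        raise ValueError("kappa is undefined when all ratings fall in one category")
    return 1.0 - observed / expected


# Hypothetical ordinal ratings (low / medium / high) from two raters.
ordinal = [[10, 5, 0], [5, 10, 5], [0, 5, 10]]
print(round(linear_weighted_kappa(ordinal), 3))  # prints 0.524
```

For the same table the unweighted kappa is about 0.394; the weighted value is higher because every disagreement in this example is only one category apart.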

When kappa = 0, agreement is the same as would be expected by chance. However, use of kappa as an omnibus measure sometimes can be misleading. For the amount of agreement expected between the two raters if.
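One way kappa can mislead as a single summary is its sensitivity to base rates: the same raw agreement can produce very different kappa values. The sketch below compares two invented 2x2 tables with identical observed agreement; `kappa_2x2` and all counts are hypothetical.

```python
def kappa_2x2(both_yes, a_yes_b_no, a_no_b_yes, both_no):
    """Cohen's kappa for two raters and a yes/no outcome, from the four cell counts."""
    n = both_yes + a_yes_b_no + a_no_b_yes + both_no
    p_o = (both_yes + both_no) / n

    # Marginal "yes" shares for each rater drive the chance agreement.
    a_yes = (both_yes + a_yes_b_no) / n
    b_yes = (both_yes + a_no_b_yes) / n
    p_e = a_yes * b_yes + (1 - a_yes) * (1 - b_yes)
    return (p_o - p_e) / (1 - p_e)


# Both hypothetical tables have 90 agreements out of 100 items.
balanced = kappa_2x2(45, 5, 5, 45)  # "yes" rated for about half the items
skewed = kappa_2x2(85, 5, 5, 5)     # "yes" rated for 90% of the items
print(round(balanced, 3), round(skewed, 3))  # prints 0.8 0.444
```

Because chance agreement rises from 0.50 to 0.82 when one category dominates, the same 90% raw agreement yields a much lower kappa in the skewed table.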

Given the design that you describe, i.e. five readers assign binary ratings, there cannot be fewer than 3 out of 5 agreements for a given subject. The items are indicators of the extent to which two raters who are examining the same set of categorical data agree while assigning the data to categories, for example classifying a tumor as malignant or benign.

